"Can’t AI Just Figure It Out?" Or How We Taught AI to Query Our Database

Can’t AI just figure it out? I thought so too, until I wrote my 100th tool to fetch data. So we really did let the AI figure it out for itself: it decides what fields it needs and how to filter, while we relax.

Motivation 🚀

At Squirrel we believe in empowering hiring managers to access and control hiring data effortlessly in whatever way suits them best. That's why we built our AI Chat Assistant - a hiring manager's helper that can answer questions about the data and take actions on your behalf.

Today we'll dive into how it answers questions about your data and the challenges we faced along the way.

The Problem with LLMs and Data

LLMs are improving at processing structured data, but they're still far from perfect. To get reliable answers we need to guide and help them along the way - minimising the amount of data they need to analyze and reducing the number of decisions they have to make.

For example, how does an LLM go about answering a request like "List all candidate names in alphabetical order"?

We could give the LLM full access to the Candidate table, which contains names, emails, phone numbers and more, but that's too much information. Instead, we should probably return an already sorted list of just the names, so that the LLM doesn't have to look at other data and doesn't need to sort the list itself.

Sounds obvious, right? But in practice, does our AI assistant need a special tool just for fetching names alphabetically? What else? Returning phone numbers would be nice too, and emails. Or how about finding candidates with a specific skill?

We could easily build each one of those tools, but that quickly becomes overwhelming. This is AI, damn it, shouldn't it just figure it out?

💡 That's exactly what we've done!

Core Challenges

- Hard Errors - Sometimes too much data is just that, too much data for the model to figure out

- Hallucinations - LLMs are pretty good at reasoning, but they can still invent data that doesn't exist

- Token Usage - Sending too much data increases cost AND slows things down

Our Solution: Smarter Queries, Less Guesswork 🛠️

We've built an AI-powered query tool that lets the LLM dynamically request the data it needs, instead of dumping everything at once.

For example, if a hiring manager asks "List all job titles in my organisation", then instead of exposing the entire Job table, the AI requests only the "title" field while filtering by organizationName = <Your Org>.

This way we minimize unnecessary data exposure, leading to faster and more accurate results.
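To make the example concrete, here is a minimal in-memory sketch of the idea: filter the rows first, then keep only the requested fields, so that only a short list of titles ever reaches the model. The row shape, the sample data and the `selectFields` helper are all made up for illustration; in the real system this work happens in the database query, not in application code.

```typescript
// Hypothetical row type: a flat record of column name -> value.
type Row = Record<string, string | number>;

// Keep rows where `filterField` equals `filterValue`, then strip every
// column that was not explicitly requested.
function selectFields(
  rows: Row[],
  filterField: string,
  filterValue: string | number,
  requestedFields: string[],
): Row[] {
  return rows
    .filter((row) => row[filterField] === filterValue)
    .map((row) => {
      const picked: Row = {};
      for (const field of requestedFields) {
        const value = row[field];
        if (value !== undefined) {
          picked[field] = value;
        }
      }
      return picked;
    });
}

// Made-up sample data standing in for the Job table.
const jobs: Row[] = [
  { id: 1, title: "Junior Engineer", location: "London", organizationName: "Acme" },
  { id: 2, title: "Designer", location: "Berlin", organizationName: "Acme" },
  { id: 3, title: "Accountant", location: "Paris", organizationName: "Globex" },
];

// Only the titles for one organisation are handed to the LLM.
const titlesForLlm = selectFields(jobs, "organizationName", "Acme", ["title"]);
console.log(JSON.stringify(titlesForLlm));
```

Ids, locations and the other organisation's rows never enter the prompt, which is the whole point of the approach.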

We're still refining this and preparing to launch it in production soon, but we've got a few kinks to work out, especially when it comes to understanding the relationships between models, e.g. linking candidate applications to jobs. We're excited to share our progress and would love to hear your thoughts on this approach!

Our tech stack

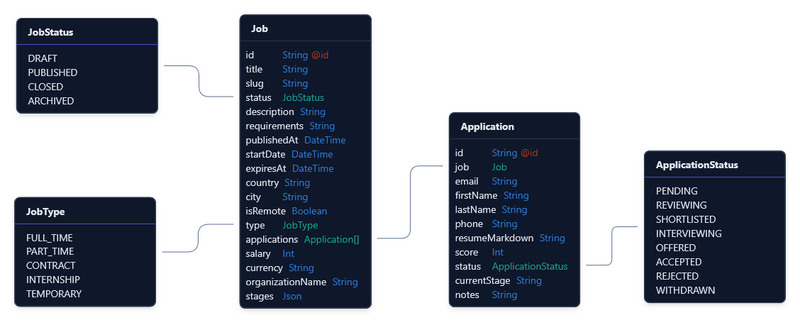

For the purposes of this demo, we're working with a reduced data model and a slice of our tech stack:

- Next.js

- PostgreSQL Database

- Prisma ORM

- Vercel AI SDK

- AI models: OpenAI GPT-4o and GPT-4o mini

How the data is structured:

We've simplified our data model to just two models, Jobs and Job Applications. That left us with uncomfortably wide tables with too many fields on them, but perhaps that's even better for our demo: more fields for the AI to choose from!

Each Job record represents an open position with key details like location, salary, and some additional things like job requirements and description. Each job is linked to an organisation.

Each Application is linked to a Job. It contains candidate-specific information such as personal details, plus some additional things like the CV and the score the candidate got for the current stage.
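As a rough picture of the two models, the shapes below are a trimmed-down sketch in TypeScript. Apart from `id`, `title`, `jobId` and `organizationName`, which appear elsewhere in this post, every field name here is an assumption made for illustration, and the real tables are much wider.

```typescript
// Hypothetical, heavily trimmed shape of a Job record.
interface Job {
  id: number;
  title: string;
  location: string;
  salary: number;
  requirements: string;
  description: string;
  organizationName: string; // each job is linked to one organisation
}

// Hypothetical, heavily trimmed shape of a job application.
interface JobApplication {
  id: number;
  jobId: number; // link back to the Job being applied for
  candidateName: string;
  candidateEmail: string;
  cv: string;
  currentStage: string;
  stageScore: number; // score the candidate got for the current stage
}

// Made-up example records showing how the two are linked.
const exampleJob: Job = {
  id: 7,
  title: "Junior Engineer",
  location: "London",
  salary: 40000,
  requirements: "Some TypeScript experience",
  description: "Entry-level engineering role",
  organizationName: "Acme",
};

const exampleApplication: JobApplication = {
  id: 101,
  jobId: exampleJob.id,
  candidateName: "Jane Doe",
  candidateEmail: "jane@example.com",
  cv: "(CV text)",
  currentStage: "Screening",
  stageScore: 4,
};
```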

Our Approach: From Chaos to Smart Queries 🧩

Building an AI assistant that can query data efficiently is harder than it might originally seem. We went through multiple iterations before reaching our current approach, and I'm sure there's plenty of room for improvement still! Here's the journey so far:

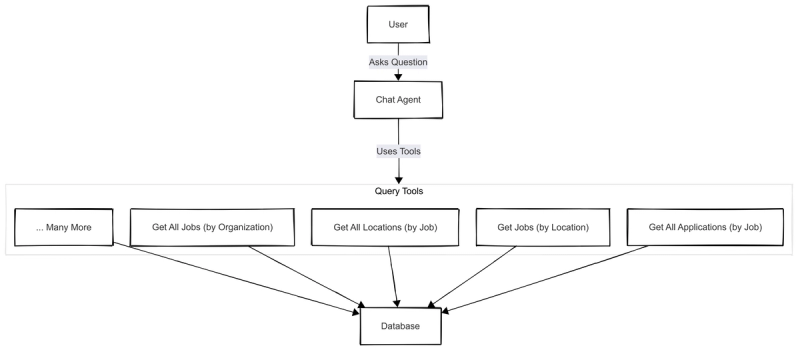

1) Version 1: The "Naive" Approach - Too many tools, too much data

At first, we kept it simple:

- One main AI agent that receives user questions

- Pre-built query tools that retrieve data from the database

For example, this top-level agent has access to tools like:

- Get all jobs, given an organization

- Get all locations, given a job

- Get all jobs, given a location

- Get all applications, given a job

... and many many more
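For illustration only, a set of tools like this could look roughly as follows. This is a sketch with made-up in-memory data and hypothetical function names, not our actual tool code, but it shows the pattern: one hand-written function per question shape, each returning whole rows.

```typescript
type JobRow = { id: number; title: string; location: string; organizationName: string };
type ApplicationRow = { id: number; jobId: number; candidateName: string; email: string };

// Made-up data standing in for the database.
const jobTable: JobRow[] = [
  { id: 1, title: "Junior Engineer", location: "London", organizationName: "Acme" },
  { id: 2, title: "Designer", location: "London", organizationName: "Acme" },
  { id: 3, title: "Accountant", location: "Paris", organizationName: "Globex" },
];
const applicationTable: ApplicationRow[] = [
  { id: 101, jobId: 1, candidateName: "Jane Doe", email: "jane@example.com" },
  { id: 102, jobId: 1, candidateName: "John Roe", email: "john@example.com" },
  { id: 103, jobId: 2, candidateName: "Ann Poe", email: "ann@example.com" },
];

// One tool per question. Most return full rows, so every column of every
// matching record ends up in the LLM's context.
const tools = {
  getJobsByOrganization: (organizationName: string): JobRow[] =>
    jobTable.filter((job) => job.organizationName === organizationName),
  getLocationsByJob: (jobId: number): string[] =>
    jobTable.filter((job) => job.id === jobId).map((job) => job.location),
  getJobsByLocation: (location: string): JobRow[] =>
    jobTable.filter((job) => job.location === location),
  getApplicationsByJob: (jobId: number): ApplicationRow[] =>
    applicationTable.filter((application) => application.jobId === jobId),
};

console.log(Object.keys(tools).length, "tools and counting");
```

Every new kind of question means another entry in `tools`, which is exactly how the list gets out of hand.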

So what's wrong with this?

- Too many tools - at some point we just had to ask "How many of these damn tools are we going to write?".

- The LLM got overwhelmed - The AI struggled when retrieving too much data. For example, one time when it was given 93 candidate names, it reported that there were 100 of them, yet returned only about 40 of the names and repeated one name to make up the rest.

- Token usage skyrocketed - Somehow dumping almost raw data to the LLM wasted tokens and slowed down the responses!

There are some optimisations we did on top of this approach. For example, returning just IDs in some instances and having other tools to look up specific information given the ID that was returned by a previous tool... but these only helped marginally and it was clear we needed to switch gears.

Verdict: 🚨 Unscalable, inefficient, and frustrating.

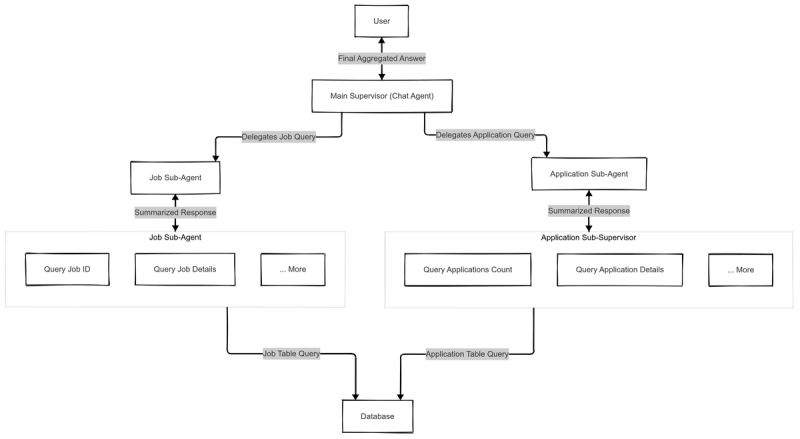

2) Version 2: Sub-Agents for each Data Model

To reduce the amount of data that the main agent has to deal with, we split tools into their own sub-agents. Each is responsible for a specific database model.

How it works

- The Main Supervisor receives user questions

- It delegates smaller questions to sub-agents based on the relevant data model that can answer the question

For example, for the question "How many applications in a Junior Engineer job?", instead of running one massive query the system breaks it into two questions:

- Job sub-agent, what is the ID of the "Junior Engineer" job?

- Application sub-agent, how many applications exist for Job ID = X?

Each of the sub-agents still has pretty much the same tools listed above, but instead of returning raw data to the main supervisor it answers the intermediate question and returns a text response. The main supervisor collects all responses and instead of interpreting rows of data it looks at the text and summarises that into a final answer.
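The flow above can be sketched without any LLM at all. In this simplified stand-in, each "sub-agent" is a plain function that answers in text, and the supervisor chains the two answers together. The data, the function names and the regex used to read the job ID out of the text are all assumptions for the sake of the example; in the real system each step is an LLM call.

```typescript
type JobRow = { id: number; title: string };
type ApplicationRow = { id: number; jobId: number };

// Made-up data standing in for the database.
const jobTable: JobRow[] = [
  { id: 7, title: "Junior Engineer" },
  { id: 8, title: "Designer" },
];
const applicationTable: ApplicationRow[] = [
  { id: 101, jobId: 7 },
  { id: 102, jobId: 7 },
  { id: 103, jobId: 8 },
];

// Each sub-agent owns one model and replies in plain text, not rows.
function jobSubAgent(jobTitle: string): string {
  const job = jobTable.find((candidate) => candidate.title === jobTitle);
  return job ? `The job ID is ${job.id}` : `No job titled "${jobTitle}" was found`;
}

function applicationSubAgent(jobId: number): string {
  const count = applicationTable.filter((row) => row.jobId === jobId).length;
  return `There are ${count} applications for job ${jobId}`;
}

// The supervisor asks the first question, reads the ID out of the reply,
// and uses it to ask the second. The regex is a stand-in for the LLM
// interpreting the sub-agent's text response.
function supervisor(jobTitle: string): string {
  const jobAnswer = jobSubAgent(jobTitle);
  const match = /ID is (\d+)/.exec(jobAnswer);
  if (!match) {
    return jobAnswer;
  }
  return applicationSubAgent(Number(match[1]));
}

const finalAnswer = supervisor("Junior Engineer");
console.log(finalAnswer);
```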

What improved?

- AI doesn't get overwhelmed as easily anymore

- Queries are more structured and focus on specific models

- Better organisation - each sub-agent is a collection of tools designed to answer questions about a specific slice of the hiring data

Still not great because...

- AI still gets overwhelmed - sub-agents still struggle when retrieving lots of data

- We still have too many tools, just distributed differently

- Token usage improved only slightly and remained high

Verdict: 🟡 Better, but still not scalable. We were just shifting complexity around.

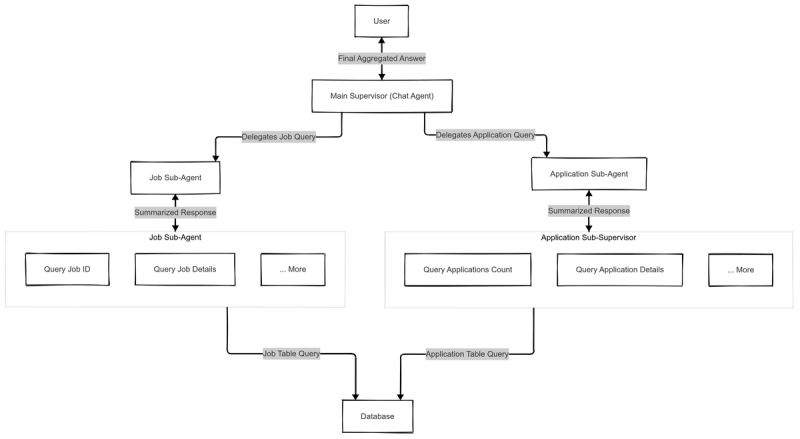

3) Version 3: The Smart Model Query Approach (What this blog is about!)

Speaking of shifting complexity, why don't we make the ORM queries like really complex?

What we're doing

- We're keeping the sub-agents, this structure works well and the idea makes me feel warm inside

- BUT! We're removing the pre-defined tools from sub-agents and empowering them to make their own choices

- Now sub-agents dynamically generate custom queries - AI decides which fields to fetch and how to filter the data

Take the same example question as in the previous section: "How many applications for a Junior Engineer job?" The main supervisor still has access to two sub-agents and it still asks the same questions:

1) "Job sub-agent, what is the job id of the Junior Engineer job?"

The Job sub-agent sees this question and constructs a query that requests just the id field while filtering on title = "Junior Engineer". It returns only the job ID instead of the full Job record.

    filter_fields = {
      filter_name = title
      filter_type = equals
      filter_value = "Junior Engineer"
    }

    requested_fields = ["id"]

2) "Applications sub-agent, how many applications in the job?"

The Applications sub-agent constructs a query requesting the application "id" field while filtering applications by jobId equal to the ID returned from the previous tool call. For now this returns a list of all matching IDs, leaving the LLM to do the counting. We've got a version that can request a .count() operation from Prisma instead, but it's a little janky and needs more work.

    filter_fields = {
      filter_name = jobId
      filter_type = equals
      filter_value = <job_id>
    }

    requested_fields = ["id"]
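To show how a request like this might be turned into something Prisma understands, here is a minimal sketch of a translator. It maps `filter_fields` and `requested_fields` onto Prisma-style `where` and `select` arguments, and rejects field names that are not on an allowlist so that an invented field never reaches the database. The `ModelQuery` type, the `toFindManyArgs` helper and the field allowlist are hypothetical; the output is a plain object so the sketch runs without a generated Prisma client.

```typescript
// Shape of the arguments the sub-agent emits, mirroring the listings above.
interface ModelQuery {
  filter_fields: {
    filter_name: string;
    filter_type: "equals";
    filter_value: string | number;
  };
  requested_fields: string[];
}

// Prisma-style findMany arguments, written as a plain object type.
interface FindManyArgs {
  where: Record<string, Record<string, string | number>>;
  select: Record<string, boolean>;
}

// Hypothetical allowlist of fields the Applications sub-agent may touch.
const APPLICATION_FIELDS = ["id", "jobId", "candidateName", "currentStage"];

function toFindManyArgs(query: ModelQuery, allowedFields: string[]): FindManyArgs {
  const { filter_name, filter_type, filter_value } = query.filter_fields;
  const usedFields = [filter_name, ...query.requested_fields];
  for (const field of usedFields) {
    // Reject invented field names before building any query.
    if (allowedFields.indexOf(field) === -1) {
      throw new Error(`Unknown field: ${field}`);
    }
  }
  const select: Record<string, boolean> = {};
  for (const field of query.requested_fields) {
    select[field] = true;
  }
  return { where: { [filter_name]: { [filter_type]: filter_value } }, select };
}

const findManyArgs = toFindManyArgs(
  {
    filter_fields: { filter_name: "jobId", filter_type: "equals", filter_value: 42 },
    requested_fields: ["id"],
  },
  APPLICATION_FIELDS,
);
console.log(JSON.stringify(findManyArgs));
```

With a real client, the result would be passed to something along the lines of `prisma.application.findMany(findManyArgs)`, where the `application` accessor is an assumption about the schema. Since Prisma's `count` also accepts a `where` clause, the same filter could back a counting variant so the LLM doesn't have to count IDs itself.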

So What? Why This Matters

We've come a long way from our first implementation of the AI Assistant to the smart query system we're experimenting with now. While the technical improvements are exciting, the real impact goes beyond just reducing token usage.

By empowering sub-agents to dynamically filter and request only the necessary data, we've achieved:

1) 10-15x reduction in token usage 🚀

Previously our system naively dumped massive amounts of data to the LLM and hoped it would reason through the noise. Now only the essential information is passed in, cutting down token usage by an order of magnitude.

- Lower costs - fewer tokens mean cheaper API calls

- Faster responses - less data to process means lower latency

2) More Reliable Answers, Less AI Guesswork 🎯

LLMs are powerful, but they tend to get lost when asked to process and interpret massive amounts of data. By shifting the data selection to the database query layer, we free up the AI to focus on what it's great at - summarising information to provide insights.

3) More Scalable and Maintainable system 🏗

With the old approach, every new data retrieval request required writing a new tool manually. That meant endless engineering effort just to support different queries.

Now with the smart query system, we don't need to guess what kind of question a user might ask, and we don't need a separate tool for every possible question - the AI figures it out on its own.

4) Freeing up AI to do what it does best 🤖

LLMs shouldn't be used for everything. They're pretty good at summarising, interpreting and reasoning over structured data, but terrible at sifting through massive datasets.

By moving data selection to the database query level, we let the AI focus on insights, trends and reasoning, instead of being a slow expensive data filter.

What next?

Looking ahead there's plenty to explore and refine. Here's what's on our radar:

1) Smarter field selection and query optimisation

Right now the demo only supports a few query operations. And while we've expanded on this in prototypes, it's still only a fraction of the full capabilities within Prisma.

There are ways to get this for free, like skipping the ORM entirely and letting the AI generate raw SQL to execute... That feels like a bad idea!
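One middle ground between "a few operations" and "raw SQL" is to grow an explicit allowlist of operators. The sketch below is one way that could look, under our own assumptions: the `Filter` shape extends the `filter_name` / `filter_type` / `filter_value` structure shown earlier, the chosen operators (`equals`, `contains`, `gt`, `lt`, `in`) are existing Prisma filter operators, and several filters are combined under Prisma's `AND`. The helper names are hypothetical.

```typescript
// Hypothetical allowlist: the only filter operators the LLM may ask for.
const ALLOWED_OPERATORS = ["equals", "contains", "gt", "lt", "in"] as const;
type Operator = (typeof ALLOWED_OPERATORS)[number];

type FilterValue = string | number | Array<string | number>;

interface Filter {
  filter_name: string;
  filter_type: string; // untrusted: produced by the LLM
  filter_value: FilterValue;
}

type WhereClause = { AND: Array<Record<string, Record<string, FilterValue>>> };

function isAllowedOperator(value: string): value is Operator {
  return (ALLOWED_OPERATORS as readonly string[]).indexOf(value) !== -1;
}

// Combine several filters into one `where` object, rejecting any
// operator that is not on the allowlist.
function toWhere(filters: Filter[]): WhereClause {
  return {
    AND: filters.map((filter) => {
      if (!isAllowedOperator(filter.filter_type)) {
        throw new Error(`Unsupported filter type: ${filter.filter_type}`);
      }
      return { [filter.filter_name]: { [filter.filter_type]: filter.filter_value } };
    }),
  };
}

const whereClause = toWhere([
  { filter_name: "title", filter_type: "contains", filter_value: "Engineer" },
  { filter_name: "salary", filter_type: "gt", filter_value: 50000 },
]);
console.log(JSON.stringify(whereClause));
```

Unlike generated SQL, anything outside the allowlist fails loudly before it reaches the database, and adding a new operator is a one-line change.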

2) Handling model relationships more intelligently

Even with just two related models in this demo, we have to do a lot of hand-holding to teach the AI how to query them properly.

We're toying with the approach of one all-knowing sub-agent that understands all models and their relationships and can navigate the hierarchy requesting any field from any model. This does feel like we're moving towards the original naive approach with one massive agent potentially having to process way more data.

We need to find a middle ground where sub-agents remain specialised but are smart enough to navigate relationships efficiently.
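One possible direction, sketched here purely as an idea: let a sub-agent name a field on a related model with a dotted path such as "job.title", and expand that into a nested Prisma-style relation filter (Prisma's `is` operator for to-one relations). The `RELATIONS` map, the `job` relation name and the `whereForPath` helper are assumptions for the example, not part of our current implementation.

```typescript
// Hypothetical map: for each model, its relation fields and their target model.
const RELATIONS: Record<string, Record<string, string>> = {
  Application: { job: "Job" },
  Job: {},
};

type NestedWhere = { [key: string]: NestedWhere | string | number };

// Turn a dotted path such as "job.title" into a nested relation filter,
// checking every hop against the known relations.
function whereForPath(model: string, path: string, value: string | number): NestedWhere {
  const [field, ...rest] = path.split(".");
  if (field === undefined || field === "") {
    throw new Error("Empty field path");
  }
  if (rest.length === 0) {
    // Last segment: a plain scalar filter on the current model.
    return { [field]: { equals: value } };
  }
  const target = RELATIONS[model]?.[field];
  if (target === undefined) {
    throw new Error(`Model ${model} has no relation named ${field}`);
  }
  // Intermediate segment: step into the related model.
  return { [field]: { is: whereForPath(target, rest.join("."), value) } };
}

const relationWhere = whereForPath("Application", "job.title", "Junior Engineer");
console.log(JSON.stringify(relationWhere));
```

In this sketch the Applications sub-agent could answer "How many applications for a Junior Engineer job?" with a single query, without first asking the Job sub-agent for an ID, while still only being allowed to follow relations that are explicitly listed.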

3) Performance & Scalability Testing

Now that we have a more efficient querying system we need to:

- Benchmark it properly against previous approaches to measure latency and token usage.

- Test it against the previously collected user queries to compare the response quality

- Optimise for production - ensuring this approach doesn't just work in demos but can scale reliably!

4) Building a Seamless User Feedback Loop

When the AI gets things wrong, makes a wrong assumption, or chooses the wrong field behind the scenes:

- How do we integrate user feedback into the system without making users deal with the database schemas?

- How do we correct mistakes in real time without adding friction to the user experience?

How This Ties Into Our Mission 🐿️

At Squirrel, this isn’t just about making an AI that can query a database - it’s about fundamentally transforming how hiring works. Every small step we take in refining our AI agents directly helps us achieve the larger vision:

1) Smarter AI = Better Hiring Decisions

A hiring manager’s time is too valuable to be wasted digging through applications or manually piecing together insights from scattered data.

By improving how our AI assistant queries and filters data, we:

✅ Surface the right candidates faster – No more manually sifting through lists.

✅ Provide structured, bias-resistant insights – AI summarizes candidate qualifications instead of dumping raw data.

✅ Make decision-making effortless – AI identifies trends and gaps, helping managers focus on the best applicants.

2) Automating the Tedious Parts of Hiring

Hiring is filled with inefficient, repetitive tasks—searching for candidate information, cross-referencing applications, and tracking interview stages. Our improved AI query system automates all of this.

✅ Instant, natural-language answers – No need to dig through dashboards.

✅ Candidate recommendations based on real data, not guesswork.

✅ An AI-powered hiring assistant that frees up time for meaningful human interactions.

3) Reducing Hiring Friction

Small delays in hiring add up—and they cost companies great talent. Our AI assistant makes every stage of the hiring process smoother:

✅ Less back-and-forth: AI instantly pulls structured insights from candidate data, eliminating unnecessary questions.

✅ More informed conversations: AI provides detailed candidate scorecards so hiring managers don’t start interviews blind.

✅ Faster candidate movement: AI automates status updates, shortlists, and scheduling, keeping hiring workflows moving.

We’re Just Getting Started 💪

This is one step in a much bigger journey toward AI-powered, hands-free hiring.

Now, we want to hear from you:

📢 What kinds of hiring queries would you want an AI assistant to handle?

📢 What’s the biggest pain point in your hiring workflow today?

Your feedback will help shape the future of AI-driven hiring. Drop us a comment, try out the demo, or reach out—we’d love to hear your thoughts.

About Artem Kalikin

Artem is a co-founder and the CTO of Squirrel. He is an experienced Engineering Manager who has built complex AI applications as well as great engineering teams.